Letting AIs Run My Computer

I recently had a well-upvoted comment on Hacker News about an AI agent and, as is the way of the internet, somebody decided to stalk my online presence to find something unrelated to be mean about.

Sadly, in this case, they had a good point. I was posting about how useful coding agents were, but the recently relaunched marketing site for my consultancy was a bit of a mess. My website builder (Hugo) had been giving me build headaches, and as a result the site still had references to localhost, typos, and other issues. Claiming AI is great at coding while your own brand site is broken is a bad look.

An Opportunity

This was a great chance to experiment with something I’d wanted to play with for a while: letting the computer take the wheel. Anthropic and OpenAI have both announced versions of this in the last six months. They’re ostensibly plays on Robotic Process Automation (RPA). RPA is useful when you have an existing application with no API surface area and no ability to change its code, so the best way to integrate with or automate it is to have an AI click the software’s buttons.

This has been bubbling in the back of my mind since it was announced, because one of the key parts of using AI successfully is feedback loops. When something changes, we need to verify the change immediately. That might mean writing automated tests, compiling, checking snapshots, or using a variety of other tools. Some verification is more abstract, such as reviewing a built webpage. Imagine a link set to 0% opacity, or hidden under a modal. From the generated code, or even the runtime DOM, it’s actually pretty hard to tell whether the page is “rendered well.”
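To make that concrete, here is a minimal Python sketch of the kind of check you could run against generated HTML: it flags links whose inline style sets opacity to 0. The `HiddenLinkFinder` class and `find_hidden_links` function are hypothetical helpers for illustration, not part of any tool mentioned here. Note what it misses: a link hidden by a stylesheet class, or covered by a modal, passes straight through, which is exactly the gap a vision-based reviewer could fill.

```python
from html.parser import HTMLParser


class HiddenLinkFinder(HTMLParser):
    """Collects hrefs of <a> tags whose *inline* style sets opacity to 0."""

    def __init__(self):
        super().__init__()
        self.hidden = []  # hrefs of links that look invisible

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        # Normalize "opacity: 0%; color: red" -> "opacity:0%;color:red"
        style = (attr_map.get("style") or "").replace(" ", "").lower()
        for decl in filter(None, style.split(";")):
            prop, _, value = decl.partition(":")
            if prop != "opacity":
                continue
            try:
                # CSS accepts both 0 and 0% for opacity
                if float(value.rstrip("%")) == 0:
                    self.hidden.append(attr_map.get("href"))
            except ValueError:
                pass  # e.g. var(--x): can't be evaluated without a real renderer


def find_hidden_links(html):
    finder = HiddenLinkFinder()
    finder.feed(html)
    return finder.hidden


page = """
<a href="/contact" style="opacity: 0%">Contact</a>
<a href="/pricing" class="invisible">Pricing</a>
<a href="/about">About</a>
"""
print(find_hidden_links(page))  # ['/contact'] (the class-hidden link is missed)
```

Catching the `/pricing` case would require resolving stylesheets and layout, which is effectively rendering the page, and that is where a screenshot-driven agent comes in.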

A Vision AI/RPA strategy lets a vision-based AI click around, poke at stuff, and see what breaks and what’s unexpected. It’s an entirely different mechanism for verification and QA. But is it any good?

The test

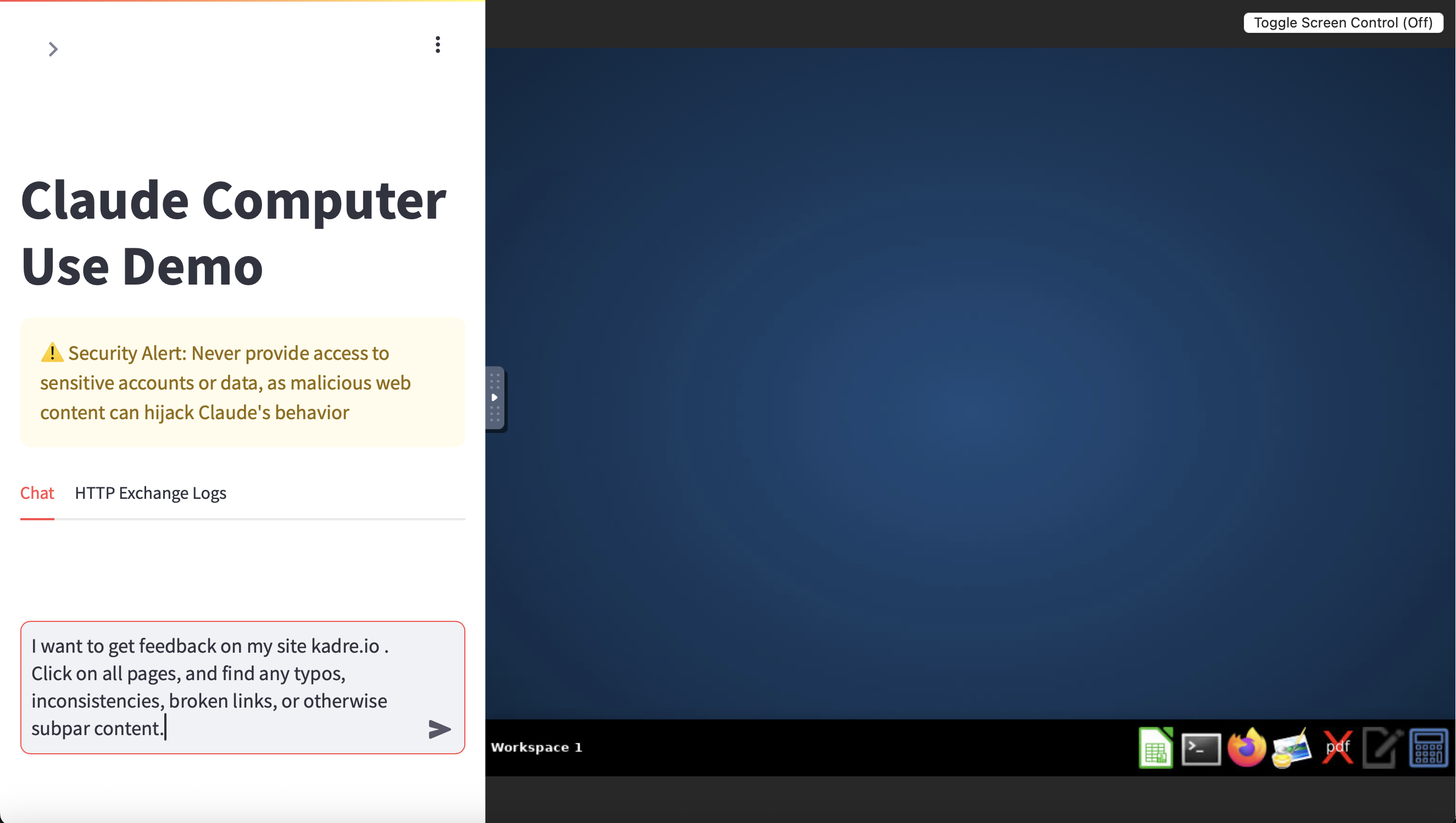

Anthropic has a relatively easy tech demo to set up. With this one command, I had a sandboxed desktop UI up and running in a Docker container:

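```shell
# $KEY holds your Anthropic API key; once running, the demo UI is served at http://localhost:8080
docker run \
  -e ANTHROPIC_API_KEY=$KEY \
  -v $HOME/.anthropic:/home/computeruse/.anthropic \
  -p 8080:8080 \
  -it ghcr.io/anthropics/anthropic-quickstarts:computer-use-demo-latest
```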

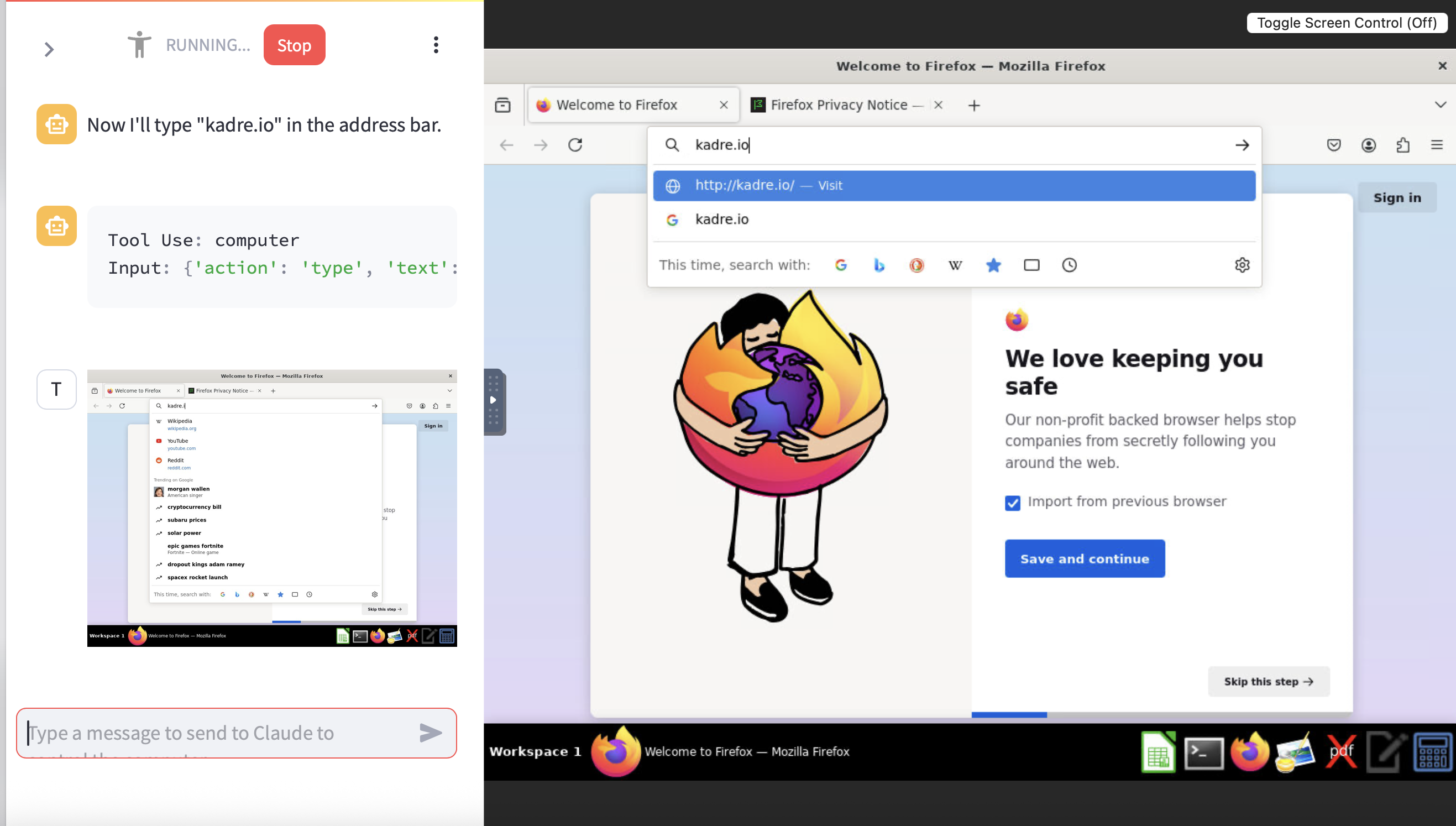

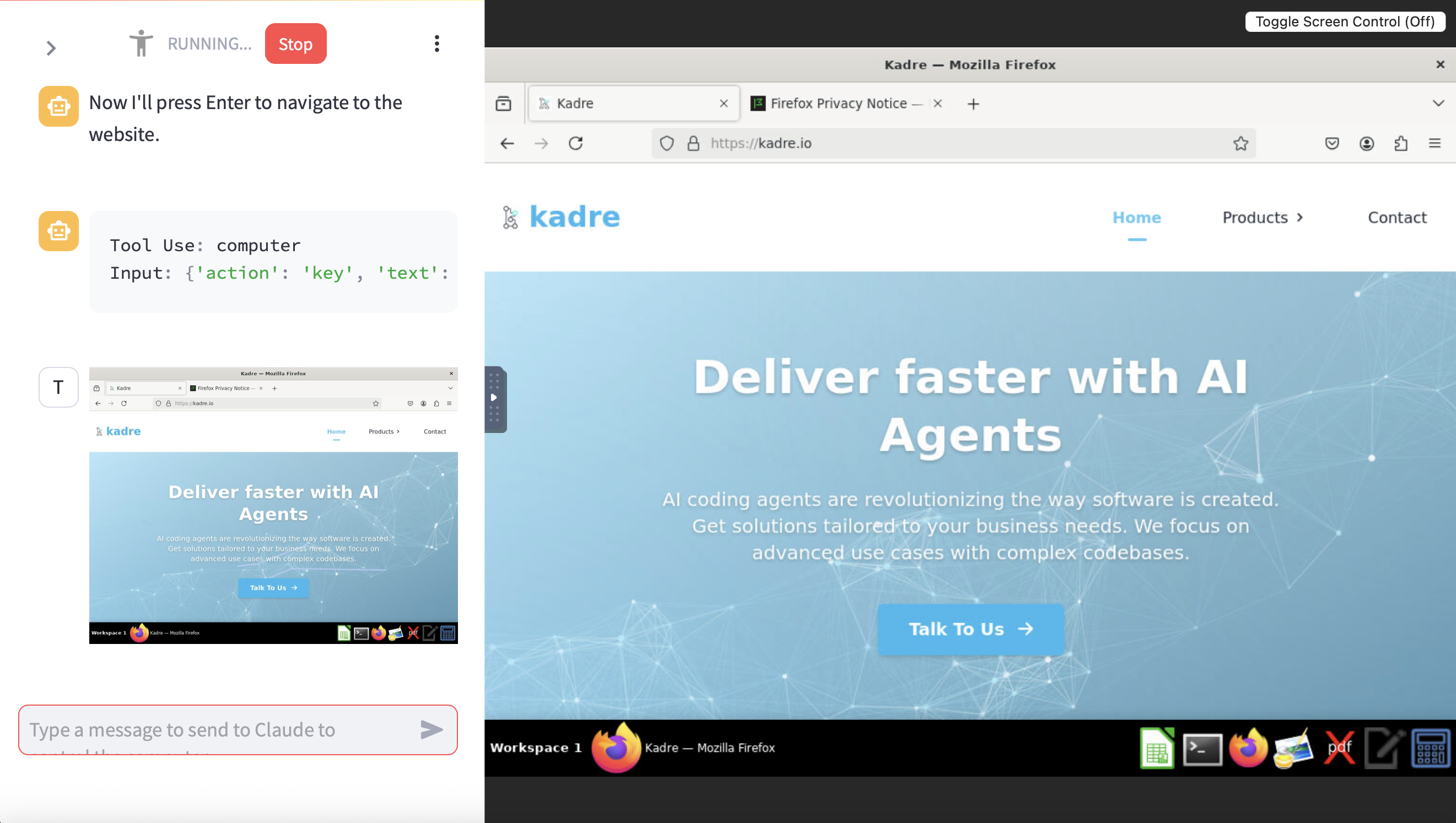

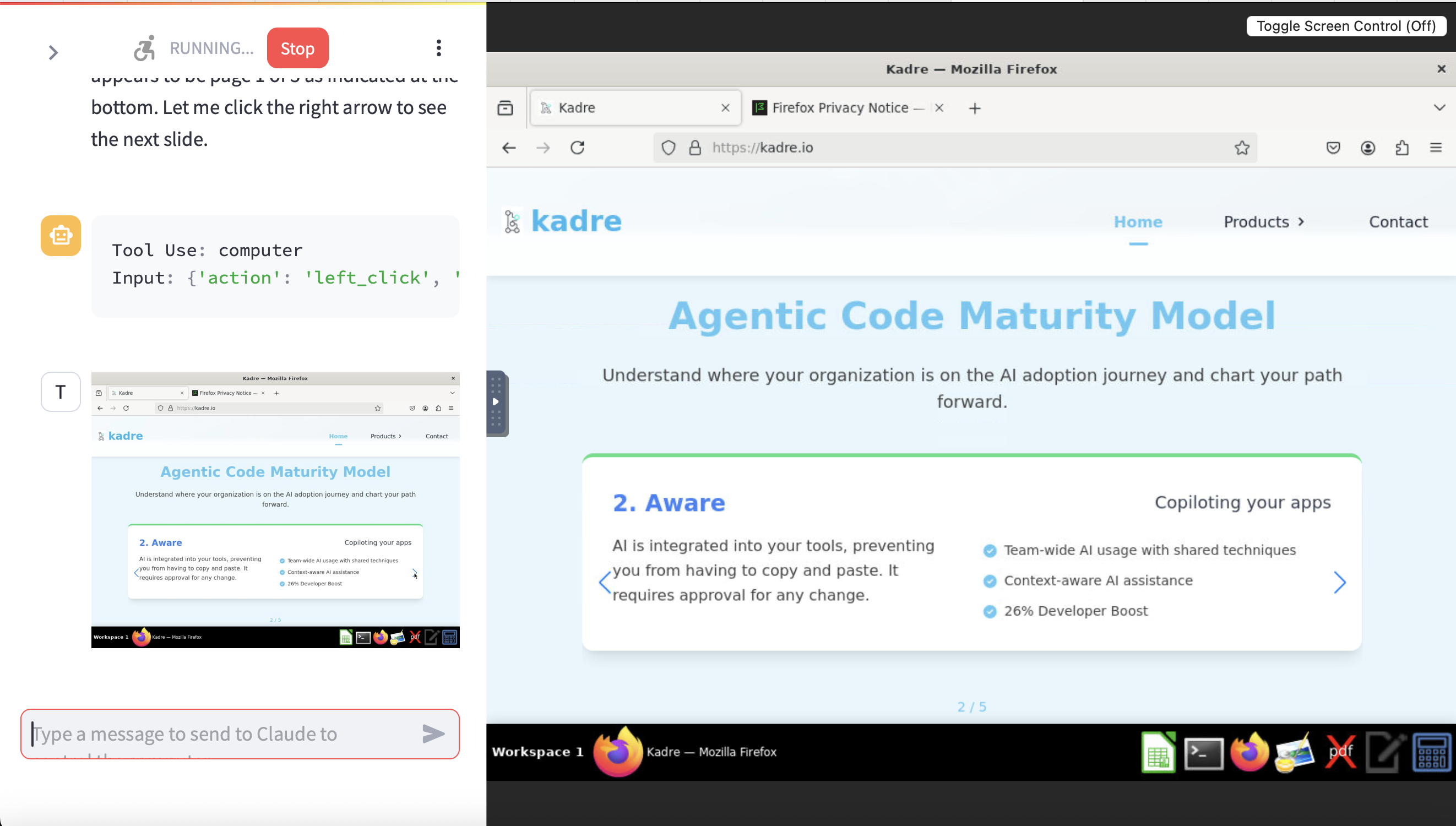

After I gave it a very simple prompt to find issues with my site, it was off to the races, booting up Firefox and navigating to my production site. It operates by taking a screenshot, “looking at it,” deciding on an action such as typing text or hitting Enter to navigate, and then taking another screenshot once the action completes.
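The screenshot, decide, act loop described above can be sketched in a few lines of Python. This is a simplified illustration, not Anthropic’s implementation: `take_screenshot`, `decide`, and `perform` are hypothetical stand-ins for the screen capture, the model call, and the mouse/keyboard driver, the action names are made up, and the `max_steps` cap is an assumption added for the sketch.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Action:
    kind: str          # hypothetical action names: "type", "key", "click", "done"
    payload: str = ""  # text to type, key to press, coordinates, etc.


def run_agent(
    take_screenshot: Callable[[], bytes],
    decide: Callable[[bytes, List[Action]], Action],
    perform: Callable[[Action], None],
    max_steps: int = 50,
) -> List[Action]:
    """Screenshot -> decide -> act, repeated until the model says it's done."""
    history: List[Action] = []
    for _ in range(max_steps):
        screen = take_screenshot()        # "look" at the current state
        action = decide(screen, history)  # the model picks the next step
        if action.kind == "done":
            break
        perform(action)                   # drive the mouse/keyboard
        history.append(action)            # the next iteration re-screenshots
    return history


# Scripted stand-in for the model, just to exercise the loop
script = [Action("type", "kadre.io"), Action("key", "Return"), Action("done")]
performed = []
history = run_agent(
    take_screenshot=lambda: b"fake-png",
    decide=lambda screen, hist: script[len(hist)],
    perform=performed.append,
)
print([a.kind for a in history])  # ['type', 'key']
```

Every iteration pays for a fresh screenshot and a model call, which is why each individual action takes noticeable time.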

Screenshots of the navigation

The output

Review

After examining every page of the kadre.io website, I’ve compiled a comprehensive list of errors and potential improvements. The website is generally well-designed with a clean, modern aesthetic and clear value proposition focusing on AI implementation services. However, there are several areas where improvements could be made:

Key Issues Found:

Content Inconsistencies:

- Product naming variations (“Human In The Loop” vs. “Human-in-Loop”)
- Typos including “Helo” in the Privacy Policy and “IDE Optmization”
- Possible misspelling of “Agentic” as “Agtentic” in some references
- Broken footer icon

User Experience Concerns:

- Google Forms requiring cookie consent creates friction in the contact process
- Carousel navigation lacks indicators for total slides/current position
- Limited contact options (no phone, chat support)
- Minimalist footer missing standard elements like copyright information

Missing Elements:

- No favicon
- No pricing information on product pages
- No case studies or testimonials to build credibility
- Missing Terms of Use document (despite being referenced)
- No FAQ section to address common questions
- No newsletter signup despite mentioning it in the Privacy Policy

Design Opportunities:

- Product diagrams could be more sophisticated
- Call-to-action buttons could be more prominent
- Visual hierarchy could better distinguish between products
- Technology partner logos need context about relationships

The site does have many positive aspects, including a clear information architecture, consistent branding, fast-loading pages, and logical navigation. With some refinements to address the identified issues, the overall user experience could be significantly enhanced.

Instruction appended to the review: “address the items that are quick fixes, and enumerate ones that need more feedback on how to fix.”

It then gave a full readout of the issues in a structured format. I opened the marketing site repository, started Claude Code, pasted in the feedback, and it made all the changes it could.

These are the changes it made:

✅ Fix typo “Helo” in layouts/_default/single.html:10

✅ Fix typo “IDE Optmization” in content/english/products/ai-launchpad.md:27

✅ Standardize product naming (“Human In The Loop” vs. “Human-in-Loop”)

✅ Check for localhost references in URLs

✅ Add copyright information to footer

✅ Enhance carousel with navigation indicators

✅ List items requiring more feedback (pricing, case studies, etc.)

✅ Check for favicon
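Checks like the localhost and typo fixes are cheap to make permanent. Here is a minimal Python sketch of a pre-deploy scan over a Hugo repo; the patterns, file suffixes, and the `scan_text`/`scan_site` helpers are illustrative assumptions, with the typo list lifted from the review above. The naming rule also assumes “Human In The Loop” is the canonical product name.

```python
import re
from pathlib import Path

# Illustrative patterns: the typo list comes from the review above, and the
# naming rule assumes "Human In The Loop" is the canonical product name.
PATTERNS = {
    "localhost URL": re.compile(r"https?://(?:localhost|127\.0\.0\.1)(?::\d+)?"),
    "typo": re.compile(r"\b(?:Helo|Optmization|Agtentic)\b"),
    "naming variant": re.compile(r"Human-in-Loop"),
}


def scan_text(text):
    """Return (line_number, label, matched_text) for every pattern hit."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for label, pattern in PATTERNS.items():
            for match in pattern.finditer(line):
                hits.append((lineno, label, match.group(0)))
    return hits


def scan_site(root, suffixes=(".md", ".html", ".toml", ".yaml")):
    """Yield ("path:line", label, matched_text) for content, layout, and config files."""
    for path in sorted(Path(root).rglob("*")):
        if path.is_file() and path.suffix in suffixes:
            text = path.read_text(encoding="utf-8", errors="replace")
            for lineno, label, matched in scan_text(text):
                yield f"{path}:{lineno}", label, matched
```

From the repo root, `for hit in scan_site("."): print(*hit)` would list every hit; running it in CI would keep a stray `localhost:1313` (Hugo’s default dev-server port) from reaching production again.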

Takeaways

- It was very slow: roughly 10 seconds per action, and about 3 minutes to view a couple of pages.

- It gave good feedback on content but didn’t identify “taste” issues. For instance, the carousel on my main page is still a bit ugly.

- The feedback can be piped directly into another agent to take action

- If the page were behind a login, giving it access credentials would have been tricky. OAuth is a non-starter.
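For a sense of scale, those rough timings imply very few actions per page. Taking the numbers above at face value, and reading “a couple” as two pages (an assumption):

$$\frac{3\ \text{min} \times 60\ \text{s/min}}{10\ \text{s/action}} = 18\ \text{actions}, \qquad \frac{18\ \text{actions}}{2\ \text{pages}} = 9\ \text{actions per page}$$

At that rate, crawling a larger site this way adds up quickly.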

My next experiment is to index a large web app: dealing with things like pure JavaScript onclick navigation, finding errors, and verifying functionality. Computer use hasn’t had a mass-market breakout like chatbots and deep research, but it’s a really good tool to have in your toolbelt.